Governing AI with a Robot in the Room

Some more thoughts from Imagination in Action and Beyond

My second appearance at Imagination in Action was on Michael Anton Dila’s panel discussion on “How to Govern AI.” Kem-Laurin Lubin and Malur Narayan were my fellow panelists. You can watch a video of the panel here.

As I joked at the start of the panel, I am not really a “governance” guy. I was there because I wrote about a thought experiment in which I wondered how we might leverage AI to help us create a comprehensive, forward-looking, and inclusive AI governance mechanism.

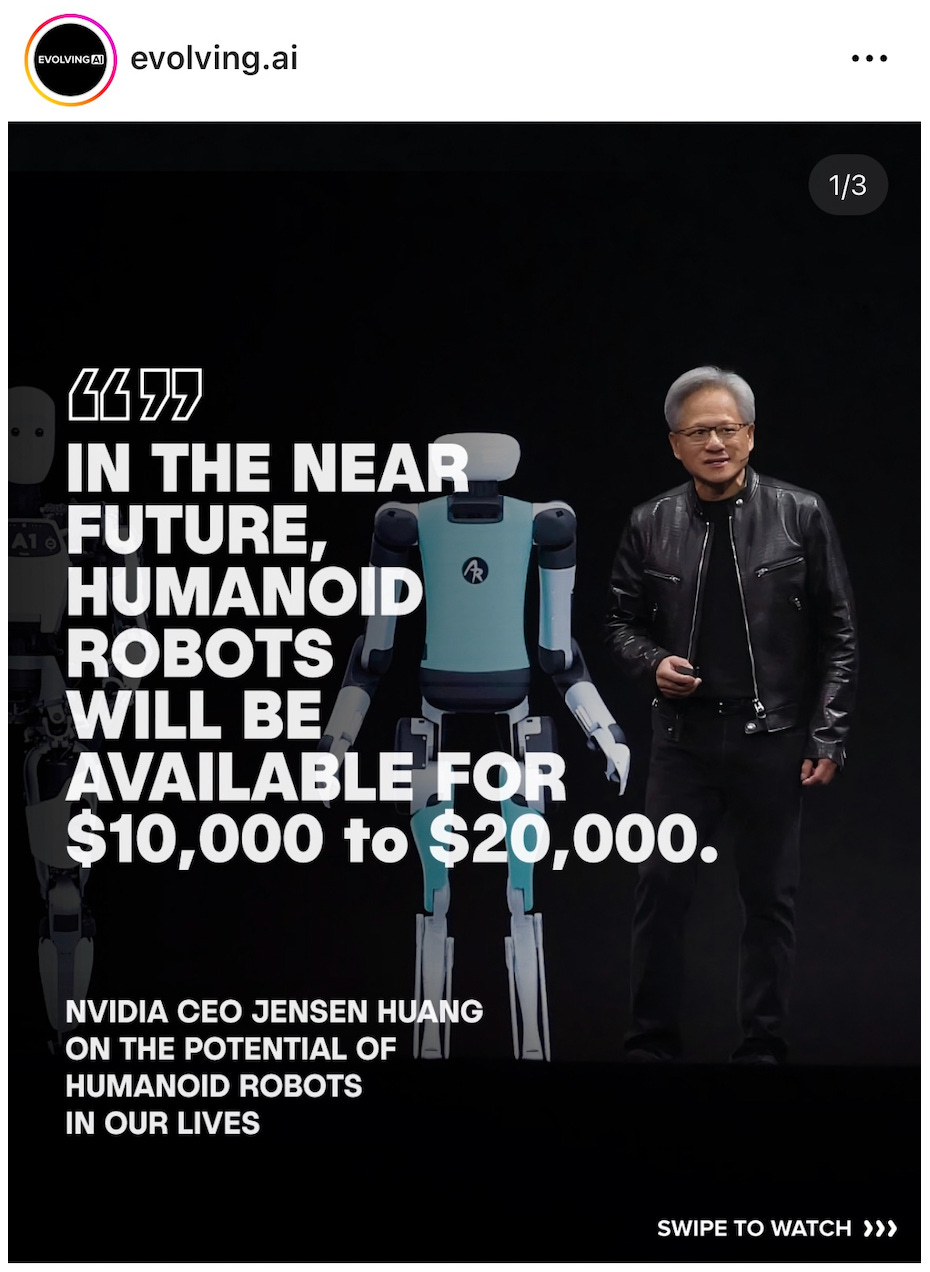

In our panel’s discussion, Michael, Kem-Laurin, and Malur addressed AI in ways that I think are important and that I had not considered before…and they primed me for thinking through NVIDIA CEO Jensen Huang’s assertion that we’d be buying humanoid robots for $10,000 - $20,000 in the near future.

Do our expectations of AI shift based on the form factor of the user interface?

Michael Anton Dila, Oslo for AI

Michael is the driving force behind Oslo for AI, an effort that brings a diverse body of experts together in a new kind of constitutional process to think through and co-design governance models and mechanisms for AI and other pervasive technologies.

Michael started off the panel with three questions:

Do you think that intelligence is well understood?

Do you think that we have a well-established consensus on the potential harm of AI systems?

Do you believe that we have existing models of governance capable of regulating emerging technologies?

The funny (but unsurprising) thing? Few people in the audience signaled “yes” to any of Michael’s questions.

Kem-Laurin Lubin, Designer and Ph.D. Candidate at the University of Waterloo

Kem-Laurin focuses on computational rhetoric and, while we were on the panel, said something that really caught my attention and imagination: we need a new language to talk about AI given its potential to be a transformative technology on a planetary scale.

This resonated with me because I am frequently frustrated by the abstract nature of conversations about AI. The lack of defined scope (or boundaries) allows for easy agreement on or casual disagreements over an amorphous and rapidly evolving concept.

Now, when I listen to a talk about AI or talk about AI myself, I frame what I am hearing (or saying) in terms of the type of AI, how it is being used, and its users. Most of my thinking and writing revolves around generative AI used by (or in support of) analytic thinkers to uncover useful information or insights that allow them to tell their audiences clear and compelling stories about the world around us based on reliable source material. There are hundreds of other combinations of AI / ML technologies, use cases, and user types out there…and each almost certainly contains combination-unique nuances (and likely comes with language borrowed from each element of the combination).

Thinking about Kem-Laurin’s point, though, terms like “trustworthy” and “explainable” are no less abstract than “AI.” My question now is what do those terms mean in the context of any given combination of technology / use case / user, and are they sufficient for the concepts and ideas that we’re trying to communicate?

Malur Narayan, Latimer

Malur is a serious tech thinker and Technology Advisor at Latimer. Latimer is developing a large language model trained on black history and black culture with the goal of ensuring that the information underpinning its gen AI is more inclusive and less biased. Malur’s talk, and the work he is doing with Latimer, really resonated with me. I think a lot about information from the perspective of a recovering U.S. intelligence analyst, and here I mean the data that makes up a large language model (LLM), the technology fueling generative AIs like ChatGPT, Gemini, and Claude. Even so, Malur’s talk gave me a much-needed reminder to think much more broadly about the data underpinning an AI: absent a conscious effort to train AIs on a diverse array of voices and perspectives, will LLMs simply represent the lowest common denominator of recent modern thinking? How will we communicate the evolution of thinking across and between cultures over time?

AI Today and Tomorrow

Today, while cleaning out my “to read” file, I came across another item that reminded me that I don’t always think broadly enough about applied AI. I tend to think of AI in the context of search, discovery, sensemaking, and storytelling, functions central to the work of an analyst or analytic thinker. NVIDIA CEO Jensen Huang prompted me to think more broadly and creatively:

When I considered Huang’s assessment alongside this video of Boston Dynamics’ new Atlas robot, my conception of AI governance suddenly became far less philosophical and far more pragmatic.

When I am thinking about AI in the context of analytic work, I think largely in terms of questions like “What information is the AI using to generate its response to my query?”, “What degree of confidence does the gen AI have in its answer and how did it determine its confidence rating?”, “Is the information the AI draws on comprehensive enough that I should have confidence in its response?”, “Is the analytic approach it uses to compile an answer methodologically and logically sound?”, etc.

Creating a governance mechanism for AI at work is likely an organizational choice that integrates corporate data access and usage rules with organizational standards and values.

In short, I feel like governing AI at work is a whole lot of “TBD” as we learn about what it is like to have AI at work.

When I think about an AI embodied in a humanoid robot that is a resident of my home, my thinking expands significantly.

Given what I assume will be a robust sensor suite, what measures will be put in place to protect the privacy of the home’s residents and guests?

How will a home robot’s AI protect itself against viruses or hacking?

How does the robot’s AI interact with other smart technologies in the home?

How does the robot’s AI interact with regulatory regimes like HIPAA or the rules governing ACH financial transactions?

How does the robot’s AI interpret local, state, and federal law? What constitutes the robot AI’s “duty to act”?

Right now, we seem to be in an era of siloed, general-purpose AIs. Will this change if more, smaller, but highly specialized AIs are brought to market? Will a domestic robot’s AI be monolithic or modular and, if modular, will the modules be certified for compliance with the appropriate regulatory regimes? If an ecosystem grows around a home robot, will the ecosystem be more in line with the tight controls imposed by Apple in its App Store or will the controls be looser?

Aside from my last question, the form factor doesn’t really matter…and, the more I think about it, the more I wonder how we might incorporate corporate and personal AIs into existing regulatory regimes…or bring them into compliance with existing professional standards (to include the ethics of those professional organizations). More broadly, though, I find myself circling back to Kem-Laurin’s point about needing a new language to talk and think about the emergence of transformational algorithms such as AI, and to Malur’s point that the information (and thinking) that underpins any AI should be considered in terms of its likely and potential downstream effects.

How and to what degree does the setting or context in which an AI is used…or its form factor and user interface…affect how you think about AIs and your relationships with the