Data Scarcity is Not Imminent

Refining it into high-quality data is a different story

Last year, Pablo Villalobos, Jaime Sevilla, Lennart Heim, Tamay Besiroglu, Marius Hobbhahn, and Anson Ho wrote “Will we run out of data? An analysis of the limits of scaling datasets in Machine Learning”. In the paper’s abstract, Villalobos et al. made the attention-grabbing claim that “Our analysis indicates that the stock of high-quality language data will be exhausted soon; likely before 2026.” That sentence, rightfully, received a lot of attention. The thing is, it was only half of Villalobos et al.’s finding. The very next sentence read: “By contrast, the stock of low-quality language data and image data will be exhausted only much later; between 2030 and 2050 (for low-quality language) and between 2030 and 2060 (for images).”

High- Versus Low-Quality Data

To start with, Villalobos and co. described how they thought about high-quality data:

“To identify high-quality data we defer to the expertise of practitioners and look at the composition of the datasets used to train large language models. The most common sources in these datasets are books, news articles, scientific papers, Wikipedia, and filtered web content.

A common property of these sources is that they contain data that has passed usefulness or quality filters. For example, in the case of news, scientific articles, or open-source code projects, the usefulness filter is imposed by professional standards (like peer review). In the case of Wikipedia, the filter is standing the test of time in a community of dedicated editors.

In the case of filtered web content, the filter is receiving positive engagement from many users. While imperfect, this property can help us identify additional sources of high-quality data, so we will use it as our working definition of high-quality data.”

The kicker?

“…the generation rate [of high-quality data] is not determined by human population or internet penetration but by the size of the economy and the share of the economy devoted to creative sectors (like science and art).”

So, if we want more high-quality data, we need to make sure the economic incentives are there to encourage thoughtful people to generate high-quality content and data. Notably, the liberal arts, especially journalism, commentary, and literary, artistic, and political criticism, are absent from Villalobos and co.’s parenthetical.

The paper leaves low-quality data undefined, so I infer it to mean “not high-quality data.” The irony is that when I think about data and the interactions I envision between people and AIs, Villalobos et al.’s definition of high-quality data is likely to fall short: there is plenty of room (read: work to be done) to improve data quality in ways that will make AIs more sophisticated.

The Looming Industry for Refining High-Quality Data

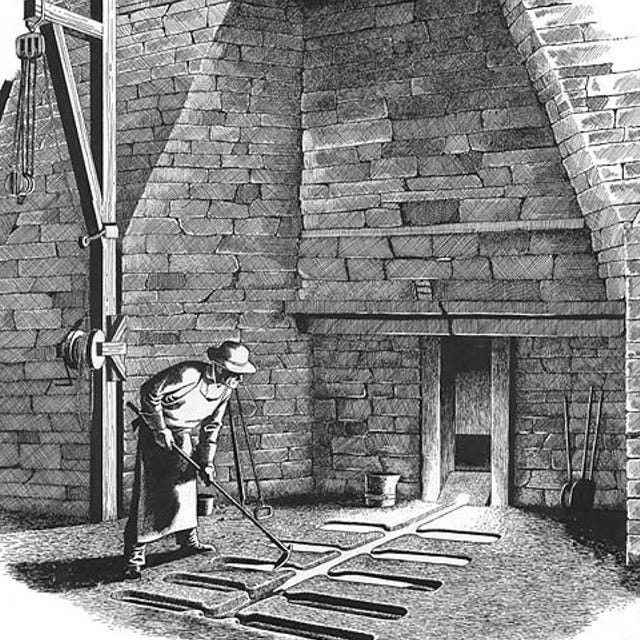

Thinking about data as a resource resonates with me: for the entirety of humanity’s existence, we have made do with information in whatever form it existed. Cave drawings gave way to tablets, which gave way to scrolls, then books, then websites; songs, epic poems, speeches, public discussions, debates, and theatrical productions all persist. We often think of data as a resource but, in order for us to maximize the utility and power of that resource, we’re going to need to start refining it at an industrial scale.

In thinking about high-quality data, I see it as a multidimensional problem and opportunity:

Format and structure. When we talk about data, we often think in terms of format and structure. Format changes organically: someone comes up with a way to store and transmit information that is better than the dominant (soon to be legacy) solution that preceded it. I am happy to get shouted down here because this is the purview of technologists. My question revolves around structure: in the couple of data migrations I have managed from one format to another, the structure often changed through the addition, deletion, or renaming of fields. To answer the question of high-quality data, I think we start with the user experience: how might people interact with and explore information and, based on those projections, what might high-quality data look like?

Substantive quality of the data. Villalobos et al.’s assertion that one hallmark of high-quality data was “positive engagement from many users” made me cringe. Why? In 2022, the Carsey School of Public Policy at the University of New Hampshire found that ten percent of over 1,100 respondents believed the Earth was flat. To what degree should the “positive engagement” of that cohort inform a determination of quality (especially as AIs begin to supplant search)? Spoiler alert: not at all. Engagement has no relation to quality. None whatsoever. Who produced the information, with what methodology, and under what professional norms; what biases were at play during the creation of the data (or informed its later use and misuse); and how the information was received and used by other experts and informed thinkers over time: these are, or should be, the considerations in evaluating the substantive quality of data.

High-quality data is critical to AI applications. Moreover, all language data can be refined in ways that improve its quality. Cleaning and enriching data is a tedious, thankless, and fundamentally unsexy task. For every $1,000 invested in AI, imagine if $10 went into data structuring, clean-up, and enrichment, and another $10 into supporting organizations that produce high-quality content (in terms of substance and professional rigor, if nothing else).